Advocates of algorithmic justice have begun to see their proverbial “days in court” with legal investigations of enterprises like UHG and Apple Card. The Apple Card case is a strong example of how current anti-discrimination laws fall short of the fast pace of scientific research in the emerging field of quantifiable fairness.

While it may be true that Apple and their underwriters were found innocent of fair lending violations, the ruling came with clear caveats that should be a warning sign to enterprises using machine learning within any regulated space. Unless executives begin to take algorithmic fairness more seriously, their days ahead will be full of legal challenges and reputational damage.

In late 2019, startup leader and social media celebrity David Heinemeier Hansson raised an important issue on Twitter, to much fanfare and applause. With almost 50,000 likes and retweets, he asked Apple and their underwriting partner, Goldman Sachs, to explain why he and his wife, who share the same financial ability, would be granted different credit limits. To many in the field of algorithmic fairness, it was a watershed moment to see the issues we advocate go mainstream, culminating in an inquiry from the NY Department of Financial Services (DFS).

At first glance, it may seem heartening to credit underwriters that the DFS concluded in March that Goldman’s underwriting algorithm did not violate the strict rules of financial access created in 1974 to protect women and minorities from lending discrimination. While disappointing to activists, this result was not surprising to those of us working closely with data teams in finance.

There are some algorithmic applications for financial institutions where the risks of experimentation far outweigh any benefit, and credit underwriting is one of them. We could have predicted that Goldman would be found innocent, because the laws for fairness in lending (if outdated) are clear and strictly enforced.

And yet, there is no doubt in my mind that the Goldman/Apple algorithm discriminates, along with every other credit scoring and underwriting algorithm on the market today. Nor do I doubt that these algorithms would fall apart if researchers were ever granted access to the models and data we would need to validate this claim. I know this because the NY DFS partially released its methodology for vetting the Goldman algorithm, and as you might expect, their audit fell far short of the standards held by modern algorithm auditors today.

In order to prove the Apple algorithm was “fair,” DFS considered first whether Goldman had used “prohibited characteristics” of potential applicants like gender or marital status. This one was easy for Goldman to pass: they don’t include race, gender or marital status as an input to the model. However, we’ve known for years now that some model features can act as “proxies” for protected classes.

The DFS methodology, based on 50 years of legal precedent, failed to mention whether they considered this question, but we can guess that they did not. Because if they had, they’d have quickly found that credit score is so tightly correlated to race that some states are considering banning its use for casualty insurance. Proxy features have only stepped into the research spotlight recently, giving us our first example of how science has outpaced regulation.
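To make the proxy idea concrete, here is a minimal sketch of the kind of check an auditor might start with: measuring how strongly a model input tracks membership in a protected group. The data and the names `group` and `credit_score` are invented for illustration and do not come from the DFS report or from any real lender; a real audit would also go well beyond a single correlation.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical audit sample (not real data): 1 = member of the protected
# group, 0 = not a member, alongside a feature the model does use.
group = [1, 1, 1, 1, 0, 0, 0, 0]
credit_score = [610, 640, 655, 600, 720, 700, 745, 690]

r = pearson(group, credit_score)
print(f"correlation between group membership and credit score: {r:.2f}")
```

A correlation near zero would suggest the feature carries little information about the group. A strong correlation, as in this toy sample, means the model can effectively "see" the protected class even though it was never given it as an input.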

In the absence of protected features, DFS then looked for credit profiles that were similar in content but belonged to people of different protected classes. In a certain imprecise sense, they sought to find out what would happen to the credit decision were we to “flip” the gender on the application. Would a female version of the male applicant receive the same treatment?

Intuitively, this seems like one way to define “fair.” And it is: in the field of machine learning fairness, there is a concept called a “flip test,” and it is one of many measures of a concept called “individual fairness,” which is exactly what it sounds like. I asked Patrick Hall, principal scientist at bnh.ai, a leading boutique AI law firm, about the analysis most common in investigating fair lending cases. Referring to the methods DFS used to audit Apple Card, he called it basic regression, or “a 1970s version of the flip test,” bringing us example number two of our insufficient laws.
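A flip test in its simplest form can be sketched in a few lines. The scoring rule below, `toy_credit_limit`, is entirely hypothetical and is deliberately biased so the test has something to find; it is not a description of Goldman’s model, which DFS says does not take gender as an input.

```python
def toy_credit_limit(applicant):
    """Hypothetical scoring rule, deliberately biased for illustration."""
    limit = applicant["income"] * 0.2
    if applicant["gender"] == "F":
        limit *= 0.8  # the unfair adjustment the flip test should expose
    return round(limit)

def flip_test(model, applicants, attribute, values):
    """Return the cases whose outcome changes when only `attribute` is flipped."""
    first, second = values
    changed = []
    for applicant in applicants:
        flipped = dict(applicant)  # copy so the original record is untouched
        flipped[attribute] = second if applicant[attribute] == first else first
        before, after = model(applicant), model(flipped)
        if before != after:
            changed.append((applicant, before, after))
    return changed

# Hypothetical applicants (not real data).
applicants = [
    {"income": 50000, "gender": "F"},
    {"income": 80000, "gender": "M"},
]

changed = flip_test(toy_credit_limit, applicants, "gender", ("F", "M"))
for applicant, before, after in changed:
    print(applicant, "limit", before, "-> flipped limit", after)
```

Note the limitation: a model that never reads the protected attribute passes this version of the test trivially, because flipping a field it ignores cannot change its output. That is exactly why proxy features matter so much.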

Ever since Solon Barocas’ seminal paper “Big Data’s Disparate Impact” in 2016, researchers have been hard at work translating core philosophical concepts into mathematical terms. Several conferences have sprung into existence, with new fairness tracks emerging at the most notable AI events. The field is in a period of hypergrowth, where the law has as of yet failed to keep pace. But just like what happened to the cybersecurity industry, this legal reprieve won’t last forever.

Perhaps we can forgive DFS for its softball audit given that the laws governing fair lending are born of the civil rights movement and have not evolved much in the 50-plus years since inception. The legal precedents were set long before machine learning fairness research really took off. If DFS had been appropriately equipped to deal with the challenge of evaluating the fairness of the Apple Card, they would have used the robust vocabulary for algorithmic assessment that’s blossomed over the last five years.

The DFS report, for instance, makes no mention of measuring “equalized odds,” a notorious line of inquiry first made famous in 2018 by Joy Buolamwini, Timnit Gebru and Deb Raji. Their “Gender Shades” paper proved that facial recognition algorithms guess wrong on dark female faces more often than they do on subjects with lighter skin, and this reasoning holds true for many applications of prediction beyond computer vision alone.

Equalized odds would ask of Apple’s algorithm: Just how often does it predict creditworthiness correctly? How often does it guess wrong? Are there disparities in these error rates among people of different genders, races or disability status? According to Hall, these measurements are important, but simply too new to have been fully codified into the legal system.
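Those questions map onto a simple calculation. In its usual formalization, equalized odds compares the true positive rate and the false positive rate across groups; the sketch below does that on invented records. The group labels, outcomes and predictions are hypothetical, and a real audit would need observed repayment outcomes that lenders rarely share.

```python
from collections import defaultdict

def error_rates(y_true, y_pred):
    """Return (true positive rate, false positive rate) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr

def equalized_odds_gaps(records):
    """records: iterable of (group, actually_creditworthy, predicted_creditworthy)."""
    truth, pred = defaultdict(list), defaultdict(list)
    for group, t, p in records:
        truth[group].append(t)
        pred[group].append(p)
    per_group = {g: error_rates(truth[g], pred[g]) for g in truth}
    tprs = [tpr for tpr, _ in per_group.values()]
    fprs = [fpr for _, fpr in per_group.values()]
    # Equalized odds holds (approximately) when both gaps are close to zero.
    return per_group, max(tprs) - min(tprs), max(fprs) - min(fprs)

# Hypothetical audit records for two groups, "A" and "B" (not real data).
actual = [1, 1, 1, 1, 0, 0, 0, 0]
records = (
    [("A", t, p) for t, p in zip(actual, [1, 1, 1, 0, 0, 0, 0, 1])]
    + [("B", t, p) for t, p in zip(actual, [1, 1, 0, 0, 0, 0, 0, 0])]
)

per_group, tpr_gap, fpr_gap = equalized_odds_gaps(records)
print(per_group, tpr_gap, fpr_gap)
```

In this toy sample the model recognizes creditworthy applicants in group A more often than in group B, which is the kind of disparity in error rates that the prohibited-characteristics check cannot reveal.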

If it turns out that Goldman regularly underestimates female applicants in the real world, or assigns interest rates that are higher than Black applicants truly deserve, it’s easy to see how this would harm these underserved populations at national scale.

Modern auditors know that the methods dictated by legal precedent fail to catch nuances in fairness for intersectional combinations within minority categories, a problem that’s exacerbated by the complexity of machine learning models. If you’re Black, a woman and pregnant, for instance, your likelihood of obtaining credit may be lower than the average of the outcomes among each overarching protected category.
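A small worked example shows how an intersectional gap can hide behind category-level averages. The applicants below are invented, and the field names `race`, `gender` and `approved` are assumptions made for this sketch only.

```python
from collections import defaultdict

def approval_rates(applicants, keys):
    """Approval rate for every combination of the given attribute keys."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for applicant in applicants:
        group = tuple(applicant[k] for k in keys)
        totals[group] += 1
        approved[group] += applicant["approved"]
    return {group: approved[group] / totals[group] for group in totals}

def make(race, gender, approved, count):
    """Build `count` identical hypothetical applicant records."""
    return [{"race": race, "gender": gender, "approved": approved}] * count

# Hypothetical outcomes (not real data): two applicants per intersection.
applicants = (
    make("Black", "F", 0, 2)
    + make("Black", "M", 1, 2)
    + make("white", "F", 1, 2)
    + make("white", "M", 1, 2)
)

by_race = approval_rates(applicants, ["race"])
by_gender = approval_rates(applicants, ["gender"])
by_both = approval_rates(applicants, ["race", "gender"])
print(by_race, by_gender, by_both)
```

Measured one category at a time, Black applicants and women are each approved half the time. Only the intersectional view shows that Black women in this toy sample are never approved, which is the nuance a category-by-category audit would miss.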

These underrepresented groups may never benefit from a holistic audit of the system without special attention paid to their uniqueness, given that the sample size of minorities is by definition a smaller number in the set. This is why modern auditors prefer “fairness through awareness” approaches that allow us to measure results with explicit knowledge of the demographics of the individuals in each group.

But there’s a Catch-22. In financial services and other highly regulated fields, auditors often can’t use “fairness through awareness,” because they may be prevented from collecting sensitive information from the start. The goal of this legal constraint was to prevent lenders from discrimination. In a cruel twist of fate, this gives cover to algorithmic discrimination, giving us our third example of legal insufficiency.

The fact that we can’t collect this information hamstrings our ability to find out how models treat underserved groups. Without it, we might never prove what we know to be true in practice: full-time moms, for instance, will reliably have thinner credit files, because they don’t execute every credit-based purchase under both spousal names. Minority groups may be far more likely to be gig workers, tipped employees or participate in cash-based industries, leading to commonalities among their income profiles that prove less common for the majority.

Importantly, these differences on the applicants’ credit files do not necessarily translate to true financial responsibility or creditworthiness. If it’s your goal to predict creditworthiness accurately, you’d want to know where the method (e.g., a credit score) breaks down.

In Apple’s example, it’s worth mentioning a hopeful epilogue to the story where Apple made a consequential update to their credit policy to combat the discrimination that is protected by our antiquated laws. In Apple CEO Tim Cook’s announcement, he was quick to highlight a “lack of fairness in the way the industry [calculates] credit scores.”

Their new policy allows spouses or parents to combine credit files such that the weaker credit file can benefit from the stronger. It’s a great example of a company thinking ahead to steps that may actually reduce the discrimination that exists structurally in our world. In updating their policies, Apple got ahead of the regulation that may come as a result of this inquiry.

This is a strategic advantage for Apple, because NY DFS made exhaustive mention of the insufficiency of current laws governing this space, meaning updates to regulation may be nearer than many think. To quote Superintendent of Financial Services Linda A. Lacewell: “The use of credit scoring in its current form and laws and regulations barring discrimination in lending are in need of strengthening and modernization.” In my own experience working with regulators, this is something today’s authorities are very keen to explore.

I have no doubt that American regulators are working to improve the laws that govern AI, taking advantage of this robust vocabulary for equality in automation and math. The Federal Reserve, OCC, CFPB, FTC and Congress are all eager to address algorithmic discrimination, even if their pace is slow.

In the meantime, we have every reason to believe that algorithmic discrimination is rampant, largely because the industry has also been slow to adopt the language of academia that the last few years have brought. Little excuse remains for enterprises failing to take advantage of this new field of fairness, and to root out the predictive discrimination that is in some ways guaranteed. And the EU agrees, with draft laws that apply specifically to AI that are set to be adopted some time in the next two years.

The field of machine learning fairness has matured quickly, with new techniques discovered every year and myriad tools to help. The field is only now reaching a point where this can be prescribed with some degree of automation. Standards bodies have stepped in to provide guidance to lower the frequency and severity of these issues, even if American law is slow to adopt.

Because whether or not discrimination by algorithm is intentional, it is illegal. So, anyone using advanced analytics for applications relating to healthcare, housing, hiring, financial services, education or government is likely breaking these laws without knowing it.

Until clearer regulatory guidance becomes available for the myriad applications of AI in sensitive situations, the industry is on its own to figure out which definitions of fairness are best.

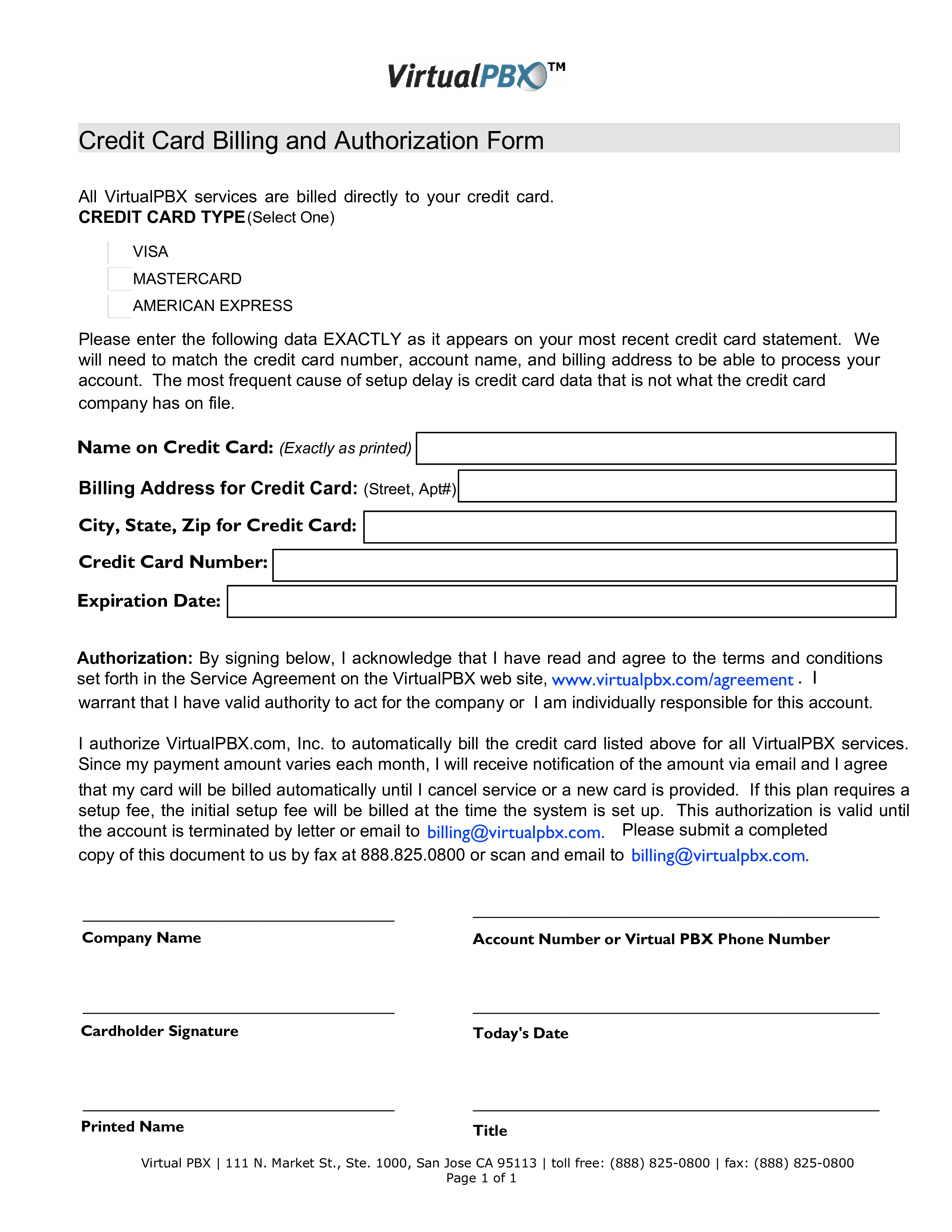

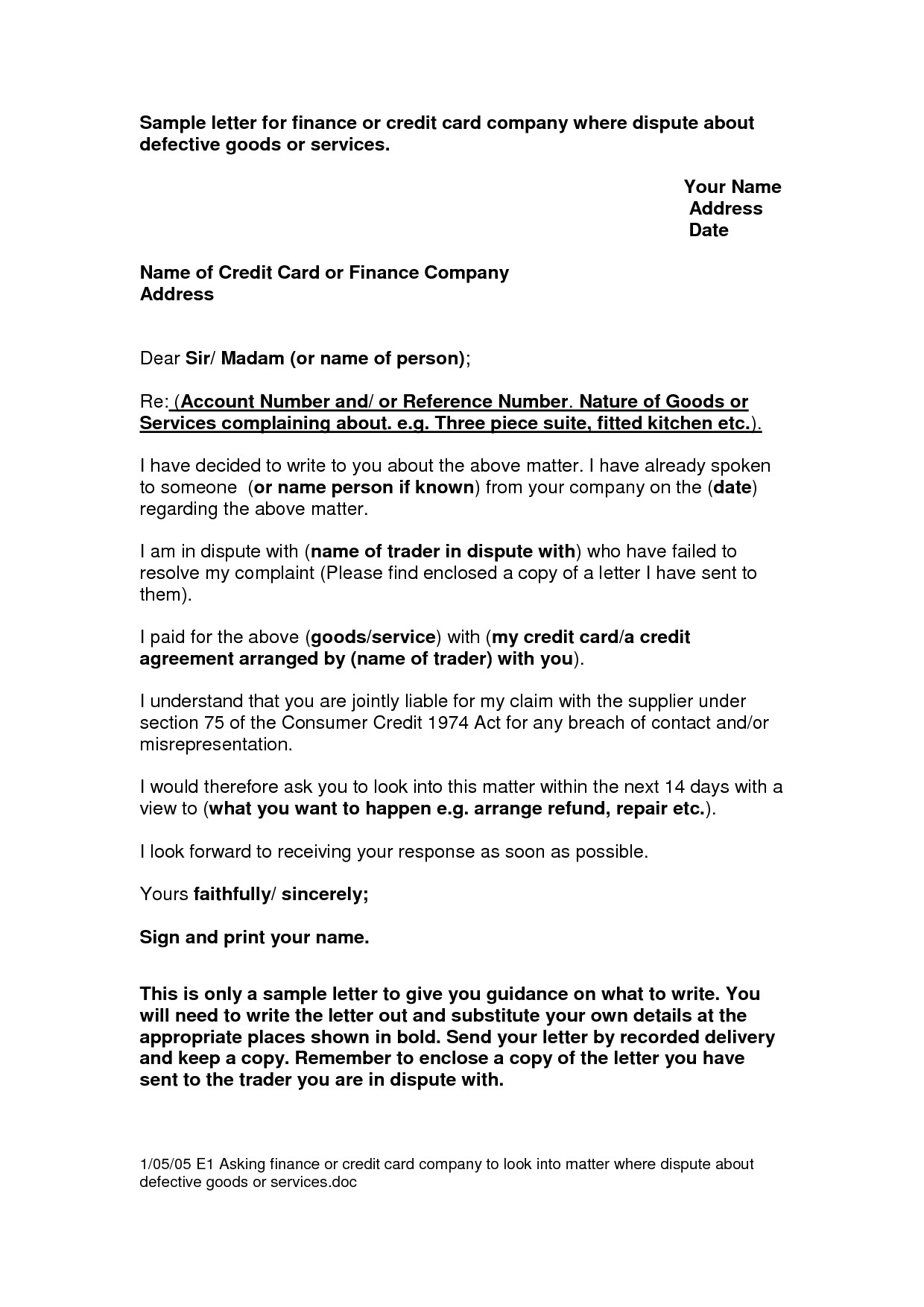

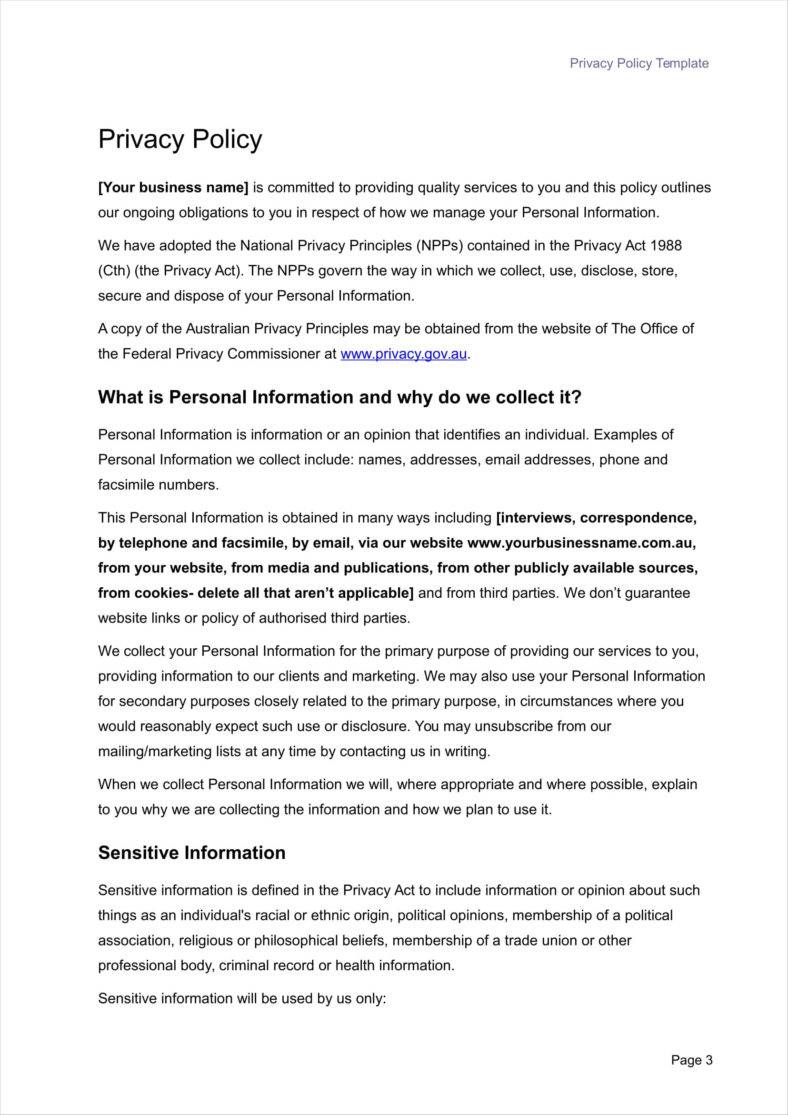

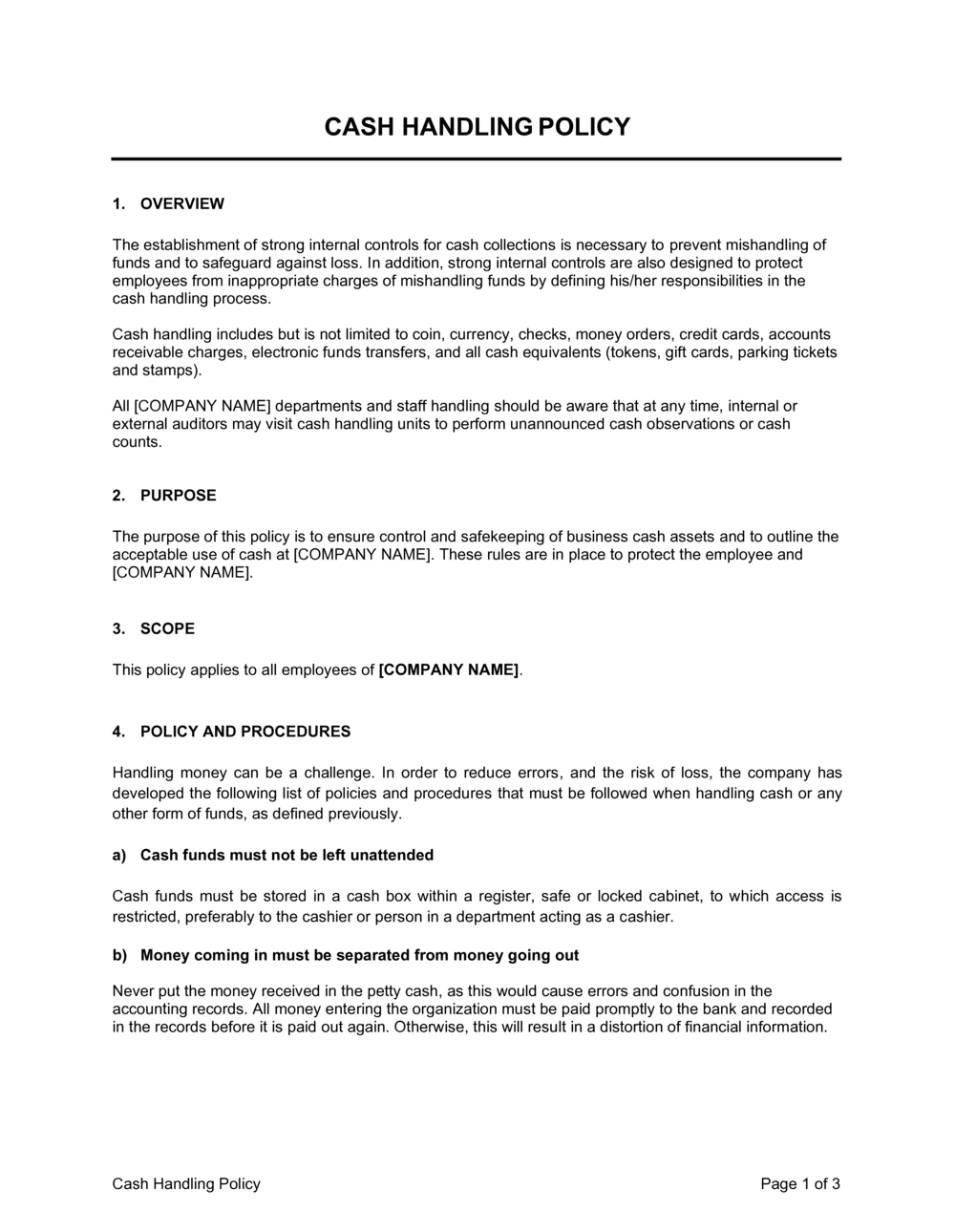

Company Credit Card Policy Template. Welcome to help my blog, within this occasion We’ll teach you in relation to Company Credit Card Policy Template.

Why not consider graphic previously mentioned? can be which remarkable???. if you feel consequently, I’l t show you many impression again beneath:

So, if you’d like to secure the wonderful pics regarding Company Credit Card Policy Template, click on save link to save the pics in your laptop. They’re prepared for obtain, if you appreciate and want to obtain it, just click save badge on the web page, and it’ll be immediately down loaded to your home computer.} At last if you would like find new and recent picture related to Company Credit Card Policy Template, please follow us on google plus or bookmark this website, we try our best to give you daily up grade with fresh and new shots. Hope you enjoy keeping here. For most upgrades and latest news about Company Credit Card Policy Template graphics, please kindly follow us on tweets, path, Instagram and google plus, or you mark this page on book mark area, We attempt to present you up-date regularly with fresh and new graphics, like your surfing, and find the ideal for you.

Thanks for visiting our site, articleabove Company Credit Card Policy Template published . Nowadays we’re excited to announce we have discovered an incrediblyinteresting topicto be discussed, that is Company Credit Card Policy Template Most people attempting to find info aboutCompany Credit Card Policy Template and definitely one of these is you, is not it?

![How to Create an Expense Report Policy [+ Free Template] With Company Credit Card Policy Template How to Create an Expense Report Policy [+ Free Template] With Company Credit Card Policy Template](https://fitsmallbusiness.com/wp-content/uploads/2018/11/Free-Employee-Expense-Policy-Template-e1542136903667.png)

[ssba-buttons]